A game $G$ of class $\mathfrak{c}$ with $N$ phases consists of

The notion of "class" is just a way to talk about various assumptions around the functions we use. In most cases, $\mathfrak{c}$ will be the class of randomized functions that are efficiently computable (i.e. running in time $\mathcal{O}(\lambda^d)$ for some constant $d$). Talking about a class of functions is a good way to sweep all of our theoretical concerns about modelling computation under the rug. We can just as easily describe our class of functions using lambda calculus, general recursive functions, Turing machines, etc.

While perhaps abstruse at first, this actually matches the vague way games are usually defined. For example, the semantic security game:

$$
\begin{aligned}
&k \xleftarrow{R} \mathcal{K} &&\cr
&& \xleftarrow{m_0, m_1} &\cr
&b \xleftarrow{R} \{0, 1\} &&\cr
&c \longleftarrow E(k, m_b) &&\cr
&& \xrightarrow{c} &\cr
&& \xleftarrow{\hat{b}} &\cr
\end{aligned}
$$

This notation implicitly fits this paradigm. We send and receive messages, with a bit of randomized computation in between. The reason we have multiple states is mainly to reflect the fact that we have a "memory" between different phases. In most cases, each state type will encompass the previous ones: $C_i \subseteq C_{i + 1}$, reflecting the fact that we retain memory of previously defined variables.
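To make the "messages with randomized computation in between" reading concrete, here is a minimal Python sketch of the semantic security challenger as three phases. Everything named here is an assumption made for illustration: the toy XOR cipher `encrypt`, the fixed `MESSAGE_LEN`, the use of `None` for the trivial message, and the use of dictionaries for states. The point is only that each state carries the previous one forward, mirroring $C_i \subseteq C_{i + 1}$.

```python
import secrets

MESSAGE_LEN = 16  # hypothetical fixed message length, in bytes


def encrypt(key: bytes, message: bytes) -> bytes:
    """Toy stand-in for E(k, m): XOR with a one-time key (an assumption)."""
    return bytes(k ^ m for k, m in zip(key, message))


def phase_0(state: dict, _incoming) -> tuple:
    """Sample k; the formalism wants a message, so send the trivial one."""
    return {**state, "k": secrets.token_bytes(MESSAGE_LEN)}, None


def phase_1(state: dict, incoming) -> tuple:
    """Receive (m_0, m_1), sample b, and send c = E(k, m_b)."""
    m0, m1 = incoming
    b = secrets.randbelow(2)
    c = encrypt(state["k"], (m0, m1)[b])
    return {**state, "b": b, "c": c}, c


def phase_2(state: dict, incoming) -> tuple:
    """Receive the guess b_hat; nothing is left to send."""
    return {**state, "b_hat": incoming}, None


# One run against a fixed, not very clever, adversary that always guesses 0.
m0, m1 = bytes(MESSAGE_LEN), bytes([0xFF]) * MESSAGE_LEN
state, _ = phase_0({}, None)
state, c = phase_1(state, (m0, m1))
state, _ = phase_2(state, 0)
```

The final `state` holds `k`, `b`, `c` and `b_hat`, which is exactly the set of variables the informal diagram leaves lying around.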

Sometimes, we are required to send a message by the formalism, but have nothing interesting to say, such as at the beginning of the previous protocol. This is reflected with a message type of $\{\bullet\}$, which can implicitly be omitted.

Because of this, a game with $N$ phases can always be extended to a game with $M \geq N$ phases, simply by sending trivial messages and having trivial states in $\{\bullet\}$. We thus have no qualms about considering all games to have some polynomially bounded number of phases $N$.

# Adversaries

An adversary $\mathscr{A}$ of class $\mathfrak{c}$ for $G$ consists of

- State types $A_0, \ldots, A_N : \mathcal{U}$

- A starting state $a_0 : A_0$

- Transition functions (of class $\mathfrak{c}$):

$$
\mathscr{A}_i : A_i \times R_i \xrightarrow{\mathfrak{c}} A_{i + 1} \times Q_{i + 1}
$$

Note that the parameters here are dictated by the game, whereas the class of adversaries isn't. We can have adversaries that aren't polynomially bounded, although most games won't be secure against adversaries of that strength.
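As a rough sketch of how an adversary's transition functions interleave with a challenger's, here is a small Python runner. The names `run`, `chal_sample`, `chal_finish` and `adv_echo` are hypothetical, and the exact indexing of who speaks when is an assumption of this sketch rather than something fixed by the definition above. The toy game deliberately leaks its secret bit, so the outcome is deterministic.

```python
import random
from typing import Any, Callable, List, Tuple

# A step maps (state, incoming message) to (new state, outgoing message).
Step = Callable[[Any, Any], Tuple[Any, Any]]


def run(challenger: List[Step], c0: Any, adversary: List[Step], a0: Any) -> Any:
    """Alternate challenger and adversary steps; return the challenger's final state."""
    c_state, a_state, incoming = c0, a0, None
    for i, c_step in enumerate(challenger):
        c_state, outgoing = c_step(c_state, incoming)
        if i < len(adversary):
            a_state, incoming = adversary[i](a_state, outgoing)
    return c_state


def chal_sample(state: dict, _incoming) -> tuple:
    """Sample a secret bit and (carelessly) send it to the adversary."""
    b = random.randrange(2)
    return {**state, "b": b}, b


def chal_finish(state: dict, b_hat) -> tuple:
    """Record the adversary's guess in the final state."""
    return {**state, "b_hat": b_hat}, None


def adv_echo(state: dict, leaked) -> tuple:
    """Remember what was seen, and send it straight back as the guess."""
    return {**state, "seen": leaked}, leaked


final = run([chal_sample, chal_finish], {}, [adv_echo], {})
```

Note that `run` never inspects how `adv_echo` works internally, which is why nothing here forces the adversary to be efficient.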

An adversary together with a game serves as an explicit model for the implicit syntax commonly used for games, which is what I like about this formalism.

A morphism between games of class $\mathfrak{c}$ with $N$ phases consists of

For any adversary of , this creates an adversary of , via:

Essentially, even though a morphism is specified with a few small working parts, we immediately get a function which turns any adversary of into an adversary of . Note that this works regardless of the conditions imposed on the initial adversary. Naturally, if we want to be efficient, then needs to be efficient as well. If is randomized, then is necessarily randomized as well.

Once again, the implicit notation we use in informal security games is accurately modeled by this formalism. This models the common situation where we create a new adversary by "wrapping" another adversary. We massage their queries into a new form, and massage the responses into a new form as well. At each point, we're allowed to do some computation, and to hold some intermediate state of our own.
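The "wrapping" idea can be sketched in a few lines of Python. This is only an illustration under assumptions: the name `wrap`, the per-phase translator lists `to_inner` and `from_inner`, and the pairing of the inner adversary's state with a helper state are my choices for the sketch, not the formal definition.

```python
def wrap(inner_steps, to_inner, from_inner):
    """Turn adversary steps for one game into adversary steps for another.

    to_inner[i] and from_inner[i] map (helper state, message) to
    (helper state, translated message) for phase i.
    """
    def make(i, inner_step):
        def step(state, incoming):
            inner_state, helper = state
            # Massage the incoming message into the form the inner adversary expects.
            helper, inner_in = to_inner[i](helper, incoming)
            inner_state, inner_out = inner_step(inner_state, inner_in)
            # Massage the inner adversary's answer into the form the new game expects.
            helper, outgoing = from_inner[i](helper, inner_out)
            return (inner_state, helper), outgoing
        return step
    return [make(i, s) for i, s in enumerate(inner_steps)]


def parity(state, n):
    """Inner adversary: answers with the parity bit of an integer."""
    return state, n % 2


# The outer game speaks strings: it sends "7" and expects "odd" or "even".
wrapped = wrap(
    [parity],
    to_inner=[lambda h, s: (h, int(s))],
    from_inner=[lambda h, bit: (h, "odd" if bit else "even")],
)
state, answer = wrapped[0]((None, None), "7")
```

The wrapper only ever calls `inner_step`; it never looks inside it, so the same wrapper works for any adversary of the inner game.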

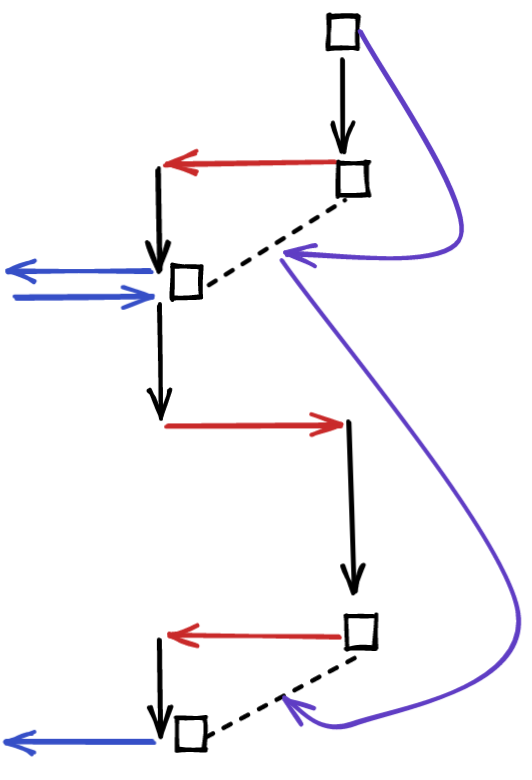

Diagrammatically, this looks like this:

One slight issue with the notion of morphism we have so far is that it encompasses many morphisms which are effectively the same. For example, if we add dummy variables to the helper states, the outcome of our wrapper is the same, yet it is technically different.

To amend this, we consider two morphisms to be equivalent if the responses and queries produced by and are the same between both morphisms.

The identity morphism has no helper states, and simply forwards the queries and responses without any change.

To compose morphisms and with states and , our states become the product . This immediately suggests the following composition rule:

It's relatively clear, although I haven't gone through the pain of proving it, that this composition rule, along with the identity morphism, satisfies the axioms of a category.
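Here is a hedged Python sketch of composition with product helper states. A morphism is represented, purely as an assumption of this sketch, by a list with one `(translate_in, translate_out)` pair per phase, each mapping `(helper, message)` to `(helper, message)`. The names `identity` and `compose`, and the order in which the two translations are applied, are illustrative choices.

```python
def identity(n_phases):
    """Forward every message unchanged; the helper state is never touched."""
    def forward(helper, message):
        return helper, message
    return [(forward, forward)] * n_phases


def compose(g, f):
    """Compose two morphisms phase by phase, with helper state (h_f, h_g).

    Incoming messages pass through g and then f before reaching the wrapped
    adversary; its answers pass back through f and then g.
    """
    def phase(g_phase, f_phase):
        (g_in, g_out), (f_in, f_out) = g_phase, f_phase

        def translate_in(helper, message):
            h_f, h_g = helper
            h_g, message = g_in(h_g, message)
            h_f, message = f_in(h_f, message)
            return (h_f, h_g), message

        def translate_out(helper, message):
            h_f, h_g = helper
            h_f, message = f_out(h_f, message)
            h_g, message = g_out(h_g, message)
            return (h_f, h_g), message

        return translate_in, translate_out

    return [phase(gp, fp) for gp, fp in zip(g, f)]


# Two toy single-phase morphisms whose helper states count how often they ran.
f = [(lambda h, x: (h + 1, x + 1), lambda h, z: (h, z * 2))]
g = [(lambda h, x: (h, x * 10), lambda h, y: (h + 1, y - 3))]

gf_in, gf_out = compose(g, f)[0]
helper, inner_message = gf_in((0, 0), 1)
helper, outer_message = gf_out(helper, inner_message)
```

Composing with `identity(1)` changes the shape of the helper state but not the messages produced, which is the kind of difference the equivalence on morphisms is meant to ignore.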

Let's call this .

We need to augment games with a notion of "winning". To do this, we have a function , which takes a probability distribution over the final state, and returns an advantage in .

Given a game and an adversary for that game , of a common class , it's clear that we have a function , given by composing the transition functions of the challenger and the adversary together. Since functions of this class are potentially randomized, we can run it over , and arrive at a probability distribution over the result . In order to define the advantage of this adversary, we apply the advantage function from above to the resulting distribution. As a short-hand for this process, we write:

In practice, is going to be very simple. Usually, it will be of the form , but we can consider more complicated things.

Such augmented games might be called "Security Games".
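As a small worked example of "distribution over final states in, advantage out", here is a Python sketch for a toy bit-guessing game, computed exactly by enumerating all coin flips. The game, the names `final_distribution` and `advantage`, and the particular choice of advantage (distance of the winning probability from $1/2$) are all assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product


def final_distribution(guess, leaky):
    """Exact distribution over final states (b, b_hat).

    The challenger samples a uniform bit b. If `leaky`, it sends b to the
    adversary; otherwise it sends the trivial message (None). The adversary
    also gets one uniform coin of its own.
    """
    dist = {}
    for b, coin in product((0, 1), repeat=2):
        view = b if leaky else None
        outcome = (b, guess(view, coin))
        dist[outcome] = dist.get(outcome, Fraction(0)) + Fraction(1, 4)
    return dist


def advantage(dist):
    """Map a distribution over final states to a number in [0, 1]."""
    p_win = sum(p for (b, b_hat), p in dist.items() if b == b_hat)
    return abs(p_win - Fraction(1, 2))


def coin_flipper(view, coin):
    """Ignores the view and guesses at random."""
    return coin


def reader(view, coin):
    """Repeats whatever the challenger sent."""
    return view


blind_advantage = advantage(final_distribution(coin_flipper, leaky=False))
leaky_advantage = advantage(final_distribution(reader, leaky=True))
```

Guessing blindly gives advantage $0$, while reading a leaked bit gives the maximum this particular advantage function allows, $1/2$.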

We say that a game has security , if for all adversaries of class , is a negligible function of some security parameter related to the class. In the usual case, we have the class of randomized computable functions with polynomial runtime in . This is commonly referred to as "computational security". We also have "statistical security", which considers adversaries that are unbounded in their runtime.

[!note] Note: At the end of the day, we only really care about whether or not the advantage is negligible, which means, essentially, that it's arbitrarily close to $0$.

Morphisms are morphisms of games , with the additional condition that for every adversary of , the induced adversary of has an advantage at least as large, up to a polynomial factor:

Here, $p$ is a polynomial function of the ambient security parameter $\lambda$. Since we're talking about games of class $\mathfrak{c}$, we already have an ambient security parameter, since we can talk about the efficiency of the challenger.

This condition works well with composition, and naturally includes the identity morphism we've seen previously. The polynomial factor loosens the restriction, while still ensuring that if is negligible, then so is .

We call such a morphism a "reduction".

[!note] Note: Here I use reduction in the sense "the security of G reduces to the security of G'", i.e. "G is secure, assuming G' is secure", i.e. "if G is broken, then G' is broken". The direction of the morphism indicates that we can use an adversary breaking $G$ to make an adversary breaking $G'$.

Sometimes, the notion of reduction uses the opposite direction.

This condition is actually necessary. In practice, most games are reasonably defined, and a morphism usually has a trivial proof of "advantage growth". Unfortunately, some games are defined in a stupid way. For example, you can have a game in which only trivial messages are exchanged, and yet whose advantage function always returns $0$. There's a trivial morphism of games, and yet, no matter what the advantage of the adversary for the original game is, the advantage for this trivial game remains $0$. Because of this, in general we need to impose the restriction of advantage growth to define reductions.

The reason we allow for the negligible factor is to allow for games with negligible differences to be considered isomorphic.

Let's call the category of security games and their reductions

There's evidently a forgetful functor , by stripping away the elements related to advantage.

It also seems that there's a free functor .

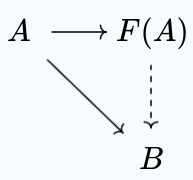

Now, the standard situation for such a functor would be:

i.e. we have a morphism , such that for each morphism , we have a reduction .

Now, the obvious choice for is to use the same game, and pick the advantage elements in a judicious way. We can clearly use the morphism for a morphism . We just need to make sure that the advantage grows. A simple way to guarantee this is to make the advantage of to be , no matter what.

Thus, like in the trivial game, for . always returns , with

Given two games , , you can define the tensor product , by using states , queries , , and transition functions .

This represents parallel composition of games. An adversary has to play both games simultaneously.

The natural advantage definition here is to define . This is well defined, because we have a distribution over , resulting in individual distributions for each part, where we can use the individual advantages.

This makes it so that if the adversary has a negligible advantage in one of the games, then they have a negligible advantage in this game as well.

I suspect, but have yet to prove, that there's a symmetric monoidal structure on this category, using the tensor product of games, and the trivial game as unit.

Given a function , we'd like to model an Oracle implementing this function as a kind of Game, so that it fits into our definition.

We start with a two phase game:

Now, here's a bit of a technical point: we define . This means that adversaries have zero advantage unless they can answer all queries. This lets us fit perfect oracles into our model of games.

[!note] Note: One implicit assumption is that is efficiently sampleable. For most oracles you'd actually care about, this is going to be the case, but I wonder if you can get around this technical limitation.

We reverse the roles of the challenger and the adversary in this situation, to a certain degree. The oracle is actually the adversary here! This is so that when we have a reduction from an oracle, we can send queries to the oracle and receive back responses. This also allows using oracles which are not necessarily efficient: reductions wrap around all adversaries, not merely the efficient ones, since a reduction itself makes no reference to the internal operation of an adversary.

Now, often, we want to allow multiple queries to the oracle. We can achieve this by adding more random queries, and letting the adversary win only if they can answer all queries correctly.

We might also want to make multiple queries in a single phase. We can achieve this by sampling multiple random points at once, sending them all, and receiving multiple responses, which we require to all be correct.

Another technicality is that we want to be able to not query the oracle at a given phase. When we compose the oracle in parallel with another game, we'd like to be able to not have to make an oracle query at every phase.

The essence of achieving this is to be able to query a trivial point , and for the adversary to respond with a trivial response . Naturally, we would like the adversary to not be able to win by responding otherwise.

With . Note that we consider to always be false. Because, once again, this only gives a non-zero advantage to adversaries which are always correct, this correctly captures the notion that an adversary with a non-trivial advantage at this game is an oracle for the function .

We can extend this in a natural way to multiple phases, and multiple queries in a single phase, and encapsulate all of this under an oracle game , for any function .
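A minimal Python sketch of the single-query oracle game may help here. It assumes a finite domain sampled uniformly, deterministic candidate oracles, and `None` as a stand-in for the trivial point and trivial response. The names `TRIVIAL`, `correct` and `oracle_advantage` are hypothetical. The advantage is all or nothing, as described above: it is $1$ only if every query is answered correctly.

```python
from fractions import Fraction

TRIVIAL = None  # stand-in for the trivial point and the trivial response


def correct(f, x, y):
    """Check one response: the trivial query needs the trivial answer,
    and any real query x needs exactly f(x)."""
    if x is TRIVIAL:
        return y is TRIVIAL
    return y is not TRIVIAL and y == f(x)


def oracle_advantage(f, domain, candidate):
    """Advantage of `candidate` in the oracle game for f over a finite domain.

    The challenger's query is uniform over the domain plus the trivial point;
    anything short of answering every query correctly earns advantage 0.
    """
    queries = [TRIVIAL, *domain]
    hits = sum(correct(f, x, candidate(x)) for x in queries)
    p_correct = Fraction(hits, len(queries))
    return 1 if p_correct == 1 else 0


def square_mod_7(x):
    return (x * x) % 7


def perfect(x):
    """Answers every query correctly, including the trivial one."""
    return TRIVIAL if x is TRIVIAL else square_mod_7(x)


def flawed(x):
    """Correct everywhere except at x = 3."""
    return 0 if x == 3 else perfect(x)


def lazy(x):
    """Always gives the trivial response."""
    return TRIVIAL


domain = range(7)
```

With these definitions, `oracle_advantage(square_mod_7, domain, perfect)` is `1`, while both `flawed` and `lazy` get `0`: being right almost everywhere, or only answering trivially, does not count as being an oracle.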

With this grunt work done, we actually have a very neat way to use oracles. For example, if reduces to , provided we have access to an oracle for , then we can simply say:

The tensor product does exactly the right thing! We're constructing an adversary for B, and we're wrapping an adversary for . But, at each stage, we can optionally query the oracle, and query it as many times as we want during each phase. And, because we're oblivious to the kind of adversary we have for , this works even for functions where no efficient oracle exists.

And, once again, this merely formalizes the syntax we implicitly use when querying oracles inside of security notation diagrams.

Because we have a notion of morphism between Security Games, i.e. a reduction, we can consider limits and other categorical objects inside this category.

There's a trivial security game wherein only trivial messages are sent, and in which we set the advantage to always be $1$. Clearly, this object is terminal in the category of security games. We can create a morphism in the right direction, simply by ignoring the adversary, and always sending trivial messages. And, since the advantage is $1$, advantage growth clearly holds as well.

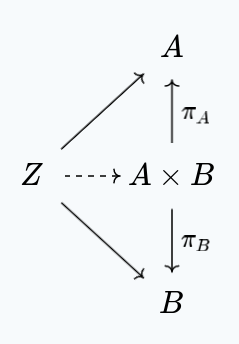

The product of and is the closest game to and with reductions to both and . That is to say, it has the weakest adversaries out of all the games with reductions to both and . That is to say, for each other game with reductions to and , we have a unique morphism to making the following commute:

Concretely, adversaries for need to be capable of winning both , as well as . To accomplish this, our messages will actually be and . That is to say, we send messages that belong to one of the games at each point.

At the start of the game, the challenger flips a coin, selecting one of the two games to play at random. From that point on, the state of the challenger follows the state of that game, and they send messages from that game. If they receive responses from the wrong game, the adversary automatically loses. Formally speaking though, our challenger states are also . We have a natural definition of advantage, by using: . This guarantees growth of advantage in reductions.

[!note] Note: TODO: Make this absolutely precise, at least in these notes.

Intuitively, this is an adequate definition, because an adversary that can consistently win at this game must be able to consistently win at either game, since they don't know which one they'll be playing in advance.
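To illustrate the coin-flip construction, here is a Python sketch for one-shot games, computed exactly by enumeration. Several things are assumptions of the sketch and not taken from the definition: each component game is a pair `(queries, check)` with uniformly sampled queries, a component's advantage is simply its winning probability, messages are tagged with `"L"` or `"R"`, and the combined advantage is the minimum of the two component advantages. The name `product_advantage` is hypothetical.

```python
from fractions import Fraction


def product_advantage(games, adversary):
    """Advantage in the product of two one-shot games.

    `games` maps a tag to (queries, check). The challenger picks a tag at
    random and sends a tagged query; a response carrying the wrong tag loses.
    """
    per_game = {}
    for tag, (queries, check) in games.items():
        wins = 0
        for q in queries:
            response_tag, response = adversary((tag, q))
            wins += int(response_tag == tag and check(q, response))
        per_game[tag] = Fraction(wins, len(queries))
    # Being bad at either component drags the whole advantage down.
    return min(per_game.values())


games = {
    "L": (list(range(4)), lambda q, r: r == 2 * q),  # toy game: double the query
    "R": (list(range(4)), lambda q, r: r == -q),     # toy game: negate the query
}


def wins_both(message):
    """Plays whichever game the challenger selected."""
    tag, q = message
    return (tag, 2 * q) if tag == "L" else (tag, -q)


def wins_left_only(message):
    """Always answers as if the left game had been selected."""
    _tag, q = message
    return ("L", 2 * q)
```

Here `product_advantage(games, wins_both)` is `1`, while `wins_left_only` gets `0`: it answers the left game perfectly, but since it cannot know in advance which game will be selected, that is not enough.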

The dual notion of the product is the sum of two games, which should be winnable by an adversary which can win either of the games, but not necessarily both of them.

Surprisingly enough, the structure of is the same as , with one small change: instead of the challenger choosing which game is played, at random, the adversary chooses, by sending their choice at the start of the game. We also need to choose . You might think that you could still get away with choosing the minimum, but this doesn't work, because the coproduct of a game with some unwinnable game should be a winnable game , so that we have a reduction .

[!note] Note: TODO: Make this absolutely precise, at least in these notes.